IBM Mulls Use Of AI Chip To Lower Watsonx Cloud Service Cost

In an effort to lower the cost of Big Blue’s new cloud AI and data platform watsonx, IBM considers use of an in-house AI chip

IBM is looking to make its newly launched cloud AI and data platform watsonx more attractive for the cost-conscious business user.

Reuters reported that an IBM executive said Big Blue is considering using artificial intelligence chips it designed in-house to lower the cost of operating a cloud computing service it made widely available this week.

IBM had actually launched its new “watsonx” cloud service in May, and according to Reuters the tech veteran is hoping this new service will allow it to exploit the boom in generative AI technologies of late, after its first major AI system (Watson), launched more than a decade ago, failed to gain market traction.

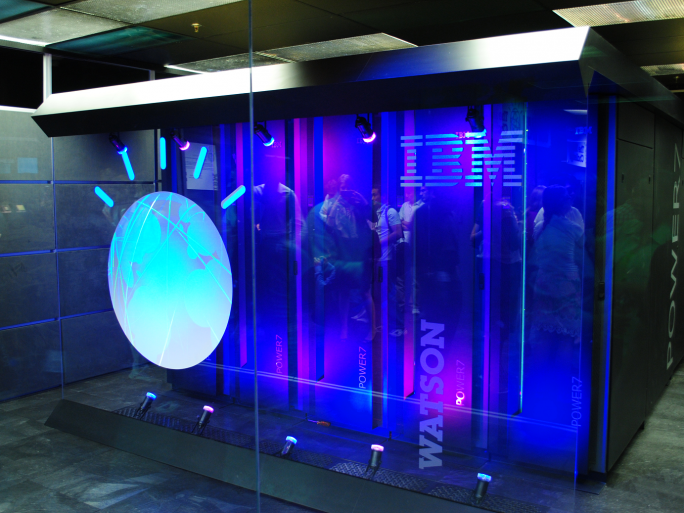

Artificial Intelligence Unit

Now in an interview with Reuters at a semiconductor conference in San Francisco, Mukesh Khare, general manager of IBM Semiconductors, said Big Blue is contemplating using a chip called the Artificial Intelligence Unit as part of its new watsonx cloud service.

According to Khare, one of the barriers the old Watson system encountered was high costs, which IBM is hoping to tackle this time around with watsonx.

Khare reportedly said using its own chips could lower cloud service costs because they are very power efficient.

IBM announced the chip’s existence in October but did not disclose the manufacturer or how it would be used, Reuters noted.

Khare reportedly said the chip is manufactured by Samsung Electronics, which has partnered with IBM on semiconductor research, and that his company is considering it for use in watsonx.

According to the Reuters interview, IBM has no set date for when the chip could be available for use by cloud customers, but Khare said the company has several thousand prototype chips already working.

But Khare reportedly added that IBM was not trying to design a direct replacement for semiconductors from Nvidia, whose chips dominate the market in training AI systems with vast amounts of data.

Instead, IBM’s chip seeks to be cost-efficient at what AI industry insiders call inference, which is the process of putting an already trained AI system to use making real-world decisions, Reuters reported.

“That’s where the volume is right now,” Khare reportedly said. “We don’t want to go toward training right now. Training is a different beast in terms of compute. We want to go where we can have the most impact.”

AI chips

Nvidia dominates the market for chips used in training AIs, with more than an 80 percent share, a position that has caused its market valuation to skyrocket this year amidst a wave of interest in the technology spurred by OpenAI’s ChatGPT and others.

Nvidia’s domination of the AI chip sector is, however, being challenged by AMD, which last month announced its latest GPU acceleration chip for artificial intelligence (AI) projects, the MI300.

AMD’s MI300 is seen as potentially a direct rival to Nvidia’s top-end H100 chip.

The MI300 is due to begin shipping for some customers later this year, with mass production expected in 2024.