Intel Tunes ‘Ponte Vecchio’ GPU For AI, High-Performance Computing

Intel introduces ‘Ponte Vecchio’, based on its Xe GPU architecture, along with the oneAPI open programming model and other supercomputing tech

Intel has announced a new line of discrete graphics chips tuned specifically for high-performance computing and artificial intelligence training.

The “Ponte Vecchio” line of graphics processing units (GPUs), announced at the Supercomputing 2019 (SC19) conference in Denver, Colorado, over the weekend, is based on Intel’s Xe graphics architecture.

Intel has said it’s planning to release its first Xe-based graphics chips for the PC market next year, with Ponte Vecchio units to follow in 2021.

The technology, named after Florence’s Ponte Vecchio bridge, is Intel’s first Xe-based GPU specifically aimed at HPC and AI workloads.

Exascale supercomputing

Ponte Vecchio is to be built on Intel’s 7nm manufacturing process, using the company’s Foveros 3D and EMIB packaging technologies, along with high-bandwidth memory and the Compute Express Link interconnect.

It is set to be used in the Argonne National Laboratory’s Aurora system, the US’ first exascale supercomputer, which is also scheduled for completion in 2021.

An exascale supercomputer is defined as one capable of performing at least one exaFLOPS, or one billion billion (10^18) floating-point operations per second.
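To put that figure in perspective, the arithmetic can be sketched in a few lines of Python. The workload size and the petascale comparison below are hypothetical values chosen for illustration and are not figures from Intel or Argonne; the calculation also assumes the machine sustains its peak rate, which real systems rarely do.

```python
# Rough arithmetic behind the "exascale" definition.
# All workload figures are hypothetical, for illustration only.

EXAFLOPS = 10**18   # one billion billion floating-point operations per second
PETAFLOPS = 10**15  # one million billion, for comparison


def seconds_to_complete(total_operations: int, flops: int) -> float:
    """Idealised run time, assuming the machine sustains `flops` throughout."""
    return total_operations / flops


# Hypothetical job requiring 10^21 floating-point operations.
workload = 10**21

exa_seconds = seconds_to_complete(workload, EXAFLOPS)
peta_seconds = seconds_to_complete(workload, PETAFLOPS)

print(f"Exascale machine:  {exa_seconds:,.0f} seconds")
print(f"Petascale machine: {peta_seconds:,.0f} seconds")
```

Under these assumptions the same job takes a thousand times longer on a one-petaFLOPS machine than on a one-exaFLOPS machine.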

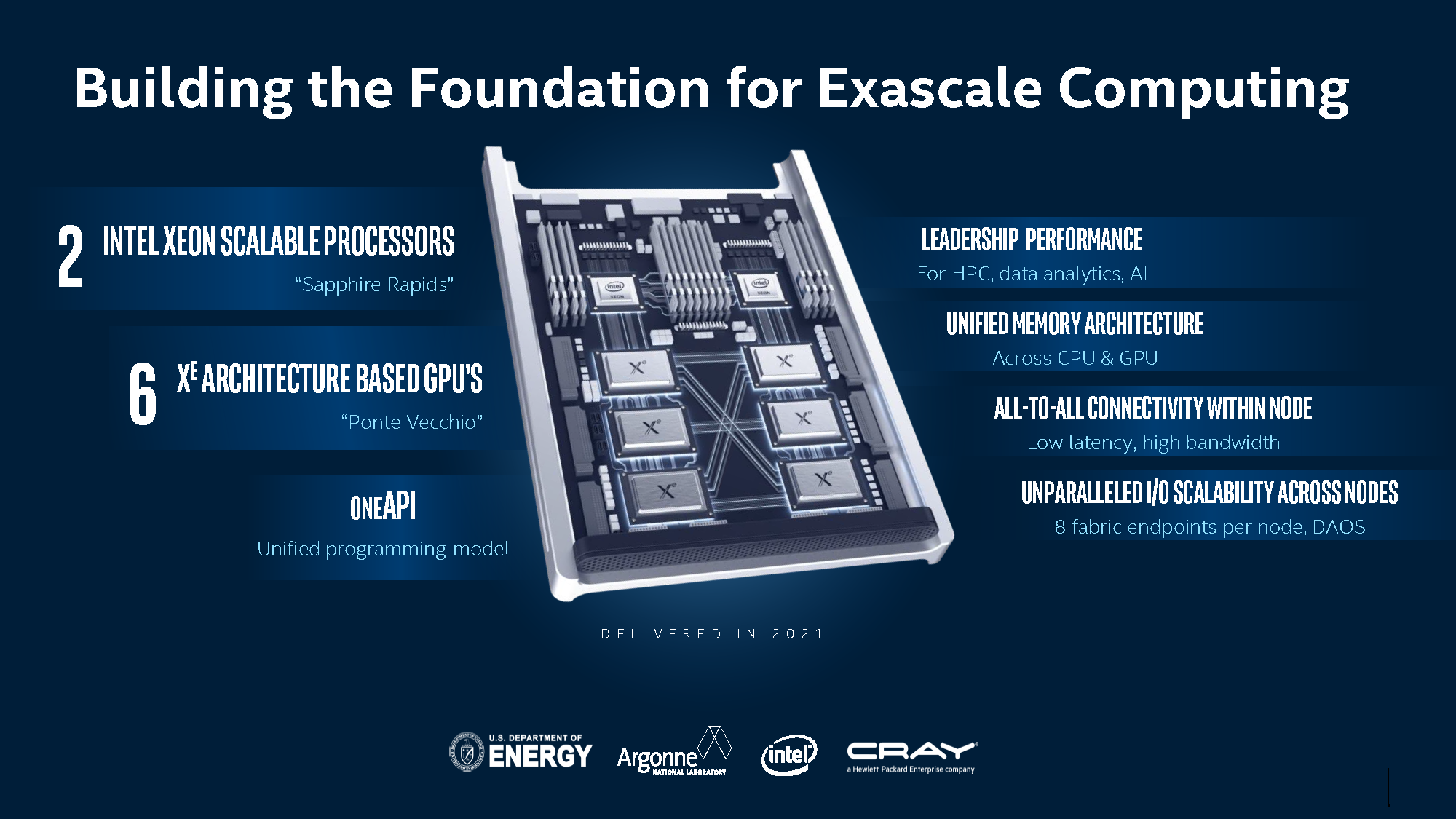

Each Aurora compute node is based on two 10nm Intel Xeon Scalable processors, code-named “Sapphire Rapids”, and six Ponte Vecchio GPUs. The system also features Intel’s Optane DC persistent memory and connectivity technologies, along with Cray’s Slingshot connectivity fabric.

oneAPI

Intel also introduced an open specification called oneAPI aimed at providing a unified model for programming diverse types of computing and accelerator architectures, including GPUs such as Ponte Vecchio.

Intel chief architect Raja Koduri said the company is aiming to help organisations bring together the power of multiple architectures, including CPUs, general-purpose GPUs and FPGAs, as well as specialised deep-learning neural network processors.

“Simplifying our customers’ ability to harness the power of diverse computing environments is paramount, and Intel is committed to taking a software-first approach that delivers a unified and scalable abstraction for heterogeneous architectures,” Koduri said.

Intel introduced its own implementation of oneAPI, with test versions of its oneAPI Toolkits available immediately. It also launched a oneAPI developer cloud that provides tools for building, testing and running workloads across a range of Intel CPUs, GPUs and FPGAs.

But the company said it is encouraging cross-vendor adoption with its open model.

Nervana NNP

Intel also demonstrated its new Nervana Neural Network Processor for Training (NNP-T) and a Nervana NNP for inference, adding to its range of AI-focused chips.


Intel said it would launch its second-generation Optane Data Centre Persistent Memory and SSD products, along with 144-layer 3D NAND memory, next year.