Microsoft Offers Deepfake Detection Tool

Effort to combat disinformation campaigns sees Microsoft offer software tool to help identify deepfake photos and videos ahead of US election

Microsoft has released a software tool that can identify deepfake photos and videos in an effort to combat disinformation.

The ability to create convincing digital likenesses of real people has been around for a while now. The 2016 film 'Rogue One: A Star Wars Story', for example, saw Hollywood studios bring the late Peter Cushing back to life on screen. A later Star Wars film also used digital techniques to feature Princess Leia after Carrie Fisher passed away.

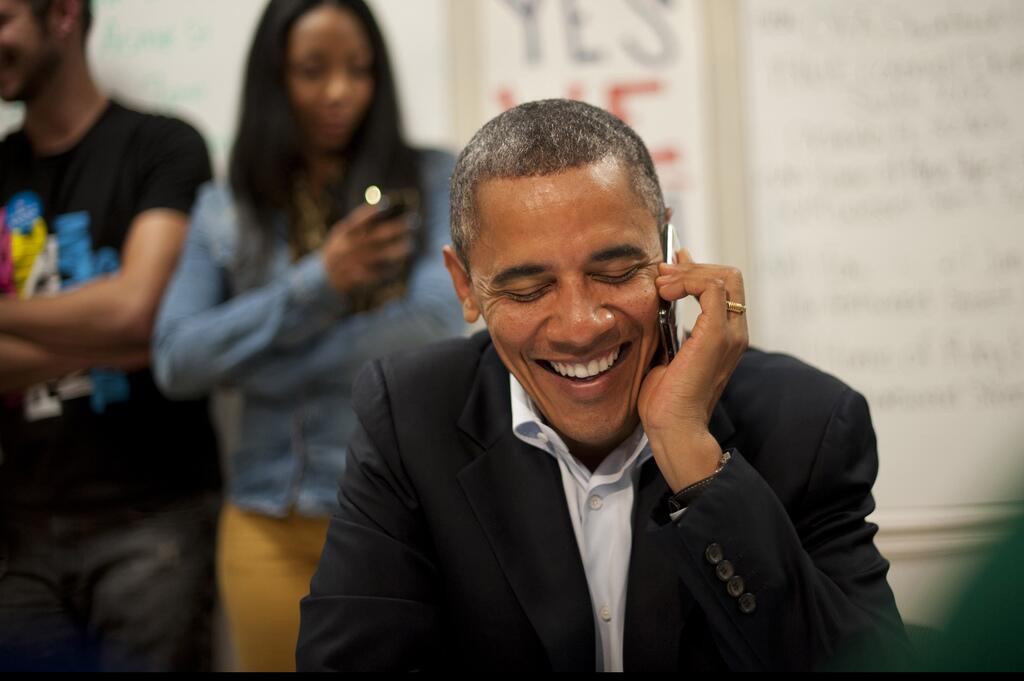

In the years since, however, deepfakes have taken a more sinister turn, with the likenesses of former US President Barack Obama and US President Donald Trump being used in various misleading videos.

Disinformation campaigns

As part of this effort to combat disinformation, Tom Burt, Microsoft's corporate vice president of customer security and trust, and Eric Horvitz, its chief scientific officer, outlined the company's response in a blog post.

“There is no question that disinformation is widespread,” wrote Microsoft. “Research we supported from Professor Jacob Shapiro at Princeton, updated this month, cataloged 96 separate foreign influence campaigns targeting 30 countries between 2013 and 2019.”

“These campaigns, carried out on social media, sought to defame notable people, persuade the public or polarise debates,” it added. “Recent reports also show that disinformation has been distributed about the Covid-19 pandemic, leading to deaths and hospitalizations of people seeking supposed cures that are actually dangerous.”

Microsoft's wider efforts against these campaigns include ElectionGuard, which aims to protect voting, and AccountGuard, which helps secure political campaigns and others involved in the democratic process.

Deepfake videos

“Disinformation comes in many forms, and no single technology will solve the challenge of helping people decipher what is true and accurate,” wrote Microsoft. “One major issue is deepfakes, or synthetic media, which are photos, videos or audio files manipulated by artificial intelligence (AI) in hard-to-detect ways.”

“They could appear to make people say things they didn’t or to be places they weren’t, and the fact that they’re generated by AI that can continue to learn makes it inevitable that they will beat conventional detection technology,” said Microsoft. “However, in the short run, such as the upcoming US election, advanced detection technologies can be a useful tool to help discerning users identify deepfakes.”

“Today, we’re announcing Microsoft Video Authenticator,” said Redmond. “Video Authenticator can analyse a still photo or video to provide a percentage chance, or confidence score, that the media is artificially manipulated. In the case of a video, it can provide this percentage in real-time on each frame as the video plays.”

Microsoft said the tool works by detecting the blending boundary of the deepfake, along with subtle fading or greyscale elements that might not be detectable by the human eye.

“We expect that methods for generating synthetic media will continue to grow in sophistication,” warned Redmond. “As all AI detection methods have rates of failure, we have to understand and be ready to respond to deepfakes that slip through detection methods. Thus, in the longer term, we must seek stronger methods for maintaining and certifying the authenticity of news articles and other media.”

Meanwhile, Facebook has previously said it would remove deepfakes and other manipulated videos from its platform, but only if they met certain criteria.