Autopilot Flaw Known By Tesla, Elon Musk, Judge Finds

US Judge finds “reasonable evidence” that Elon Musk and other Tesla managers knew of defect in Autopilot system

Tesla and Elon Musk have been handed a setback by a US judge, in another legal case concerning the Autopilot driver assistance system.

Reuters reported that Judge Reid Scott, in the Circuit Court for Palm Beach County, found “reasonable evidence” that Tesla Chief Executive Elon Musk and other managers knew the EVs had a defective Autopilot system but still allowed the cars to be driven unsafely.

The potentially damaging ruling last week from the Florida judge also said that the plaintiff in a lawsuit over a fatal crash could proceed to trial and bring punitive damages claims against Tesla for intentional misconduct and gross negligence.

Autopilot lawsuit

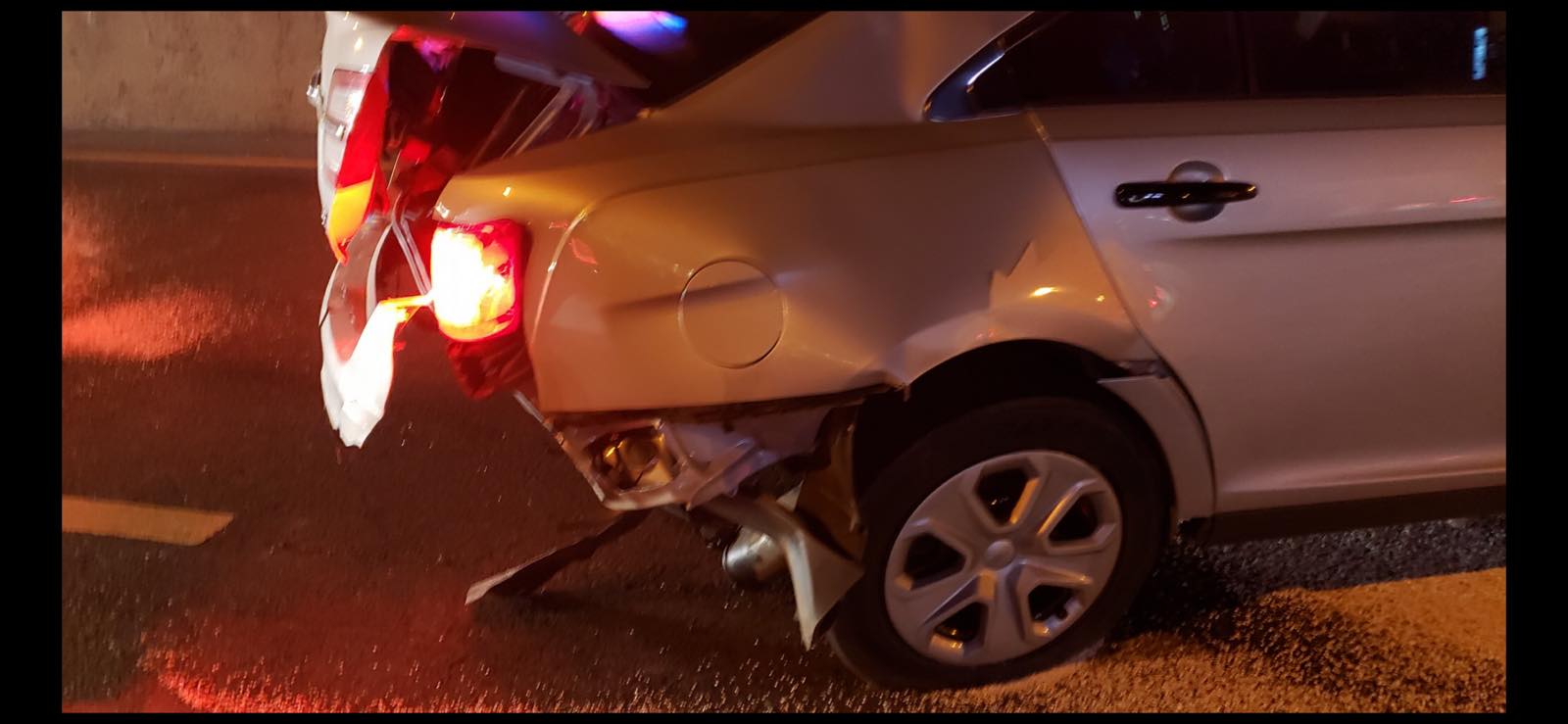

According to Reuters, the Florida lawsuit arose out of a 2019 crash north of Miami in which owner Stephen Banner’s Model 3 drove under the trailer of an 18-wheeler lorry that had turned onto the road, shearing off the Tesla’s roof and killing Banner.

In May 2019, the US National Transportation Safety Board (NTSB) issued a preliminary report into the fatal Tesla crash of Jeremy Beren Banner, 50, and found that Autopilot had been engaged for 10 seconds before the crash.

The crash, which killed the father of three, occurred in March 2019 on a highway in Delray Beach, Florida, and the NTSB noted that neither Autopilot nor the driver took evasive action.

Four years later, Bryant Walker Smith, a University of South Carolina law professor, reportedly called the judge’s summary of the evidence significant, because it suggests “alarming inconsistencies” between what Tesla knew internally and what it was saying in its marketing.

“This opinion opens the door for a public trial in which the judge seems inclined to admit a lot of testimony and other evidence that could be pretty awkward for Tesla and its CEO,” Smith was quoted by Reuters as saying. “And now the result of that trial could be a verdict with punitive damages.”

The Florida judge reportedly found evidence that Tesla “engaged in a marketing strategy that painted the products as autonomous” and that Musk’s public statements about the technology “had a significant effect on the belief about the capabilities of the products.”

Judge Scott also found that the plaintiff, Banner’s wife, should be able to argue to jurors that Tesla’s warnings in its manuals and “clickwrap” agreement were inadequate.

The judge reportedly said the accident is “eerily similar” to a 2016 fatal crash involving Joshua Brown, in which the Autopilot system failed to detect a crossing truck and the vehicle went underneath a tractor trailer at high speed.

“It would be reasonable to conclude that the Defendant Tesla through its CEO and engineers was acutely aware of the problem with the ‘Autopilot’ failing to detect cross traffic,” the judge reportedly wrote.

A Tesla spokesperson could not immediately be reached for comment on Tuesday, Reuters reported.

Legal cases

The ruling is a setback for Tesla after the company won two product liability trials in California earlier this year over the Autopilot driver assistance system.

Earlier this year the firm won its first jury trial for a non-fatal Autopilot-linked accident, in a case in which a woman said the feature caused her Model S to suddenly veer into the centre median of a Los Angeles street.

Then, earlier this month, Tesla won another in a series of US lawsuits (and federal investigations) over fatal accidents and its Autopilot driver assistance system.

The civil action, filed in Riverside County Superior Court in California, had alleged that Tesla’s Autopilot system caused 37-year-old Micah Lee’s Model 3 to suddenly veer off a highway east of Los Angeles at 65 miles per hour (105 km per hour) back in 2019.

The EV then struck a palm tree and burst into flames, but a jury found that Tesla was not liable for the death of Micah Lee.

The National Highway Traffic Safety Administration (NHTSA) meanwhile continues its investigation of Autopilot, after identifying more than a dozen crashes in which Tesla vehicles hit stationary emergency vehicles.

Tesla side

Tesla for its part says Autopilot is intended for use on highways and limited-access roads, although the system is not disabled in other environments.

It says drivers are repeatedly informed that they must remain alert and ready to take over from Autopilot at a moment’s notice. The company has previously said that the drivers involved in accidents were not paying attention.

But critics say Tesla has lulled drivers into a false sense of security with public statements about Autopilot’s capabilities and with a product design that allows their attention to wander while the system controls the vehicle.